Claude AI Web Search turns Anthropic’s large language model into a real-time “answer engine.” Powered by Brave Search, it pulls fresh data, cites every source, and, since May 27, 2025, works on the free plan. Keep reading for a deep dive into how it works, why it matters, and how to get the most value out of it.

1. From Static Model to Live “Answer Engine”

Large language models (LLMs) traditionally freeze at a knowledge cut-off, the last date covered by their training data. Users loved Claude’s reasoning but hated discovering it “didn’t know” about events after 2024.

Anthropic’s answer: native web search.

- March 20, 2025: feature preview for paid U.S. users.
- May 27, 2025: global rollout to every plan, free included.

Why Brave, not Google or Bing?

Brave runs an independent index, so Anthropic is less entangled with Big Tech rivals that run their own AI assistants, and Claude can surface niche sources that SEO-driven rankings often overlook.

2. How Claude AI Web Search Works Under the Hood

2.1 Intelligent Invocation

Claude first decides if live data is needed. A question about WWII? Static memory is fine. “Who won yesterday’s NBA Finals?” triggers web search automatically.
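Anthropic doesn’t publish the rule Claude uses to make this call, so here is only a toy illustration of the idea: a hypothetical `needs_web_search` helper that triggers on recency words or on a year later than an assumed 2024 cut-off. The cue list and the cut-off year are assumptions, not Claude’s actual logic.

```python
import re

# Hypothetical recency cues; NOT Anthropic's actual trigger list.
RECENCY_CUES = (
    "today", "yesterday", "tonight", "this week", "latest",
    "current", "right now", "breaking", "price of", "score",
)

def needs_web_search(question: str, knowledge_cutoff_year: int = 2024) -> bool:
    """Toy heuristic: search when the question contains a recency cue or
    mentions a year after the (assumed) knowledge cut-off."""
    q = question.lower()
    if any(cue in q for cue in RECENCY_CUES):
        return True
    # Collect four-digit years such as 1945 or 2025 mentioned in the question.
    years = [int(y) for y in re.findall(r"\b(19\d{2}|20\d{2})\b", q)]
    return any(y > knowledge_cutoff_year for y in years)

print(needs_web_search("Who won yesterday’s NBA Finals?"))
print(needs_web_search("What caused WWII?"))
```

A keyword list is crude; a real system would let the model itself judge whether its memory is enough, which is what the article describes.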

2.2 Query Generation & Brave Retrieval

- Semantic rewrite. Claude reformulates your natural-language question into a search-optimized query.
- Brave Search call. It pulls the top ~10 organic URLs; 86.7% of the links Claude ultimately cites match Brave’s own top results.
- Relevancy filter. Pages older than your implied timeframe or behind paywalls are down-ranked.
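To make the relevancy filter concrete, here is a minimal sketch of “down-ranking” under stated assumptions: the `SearchHit` fields, the `top_k=10` cut, and the rule “stale or paywalled pages sink below fresh, open ones, otherwise keep the engine’s order” are all hypothetical, not Anthropic’s implementation.

```python
from dataclasses import dataclass
from datetime import date
from typing import List

@dataclass
class SearchHit:
    url: str
    rank: int          # 1 = top organic result from the search engine
    published: date
    paywalled: bool

def rerank(hits: List[SearchHit], not_before: date, top_k: int = 10) -> List[SearchHit]:
    """Keep the engine's top_k results, then move stale or paywalled pages
    behind fresh, open ones while preserving engine order within each group."""
    top = sorted(hits, key=lambda h: h.rank)[:top_k]
    # False sorts before True, so non-demoted hits come first.
    return sorted(top, key=lambda h: (h.paywalled or h.published < not_before, h.rank))

hits = [
    SearchHit("https://a.example", 1, date(2023, 1, 1), False),   # stale
    SearchHit("https://b.example", 2, date(2025, 5, 1), True),    # paywalled
    SearchHit("https://c.example", 3, date(2025, 5, 2), False),   # fresh and open
]
print([h.url for h in rerank(hits, not_before=date(2025, 1, 1))])
```

Demoting rather than dropping keeps a fallback when every result is old, which matches the article’s “down-ranked” wording.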

| Stage | Latency (avg) | Claude touch-points |
|---|---|---|
| Query rewrite | 20 ms | Model reasoning |
| Brave API fetch | 300 ms | External call |
| Content filtering | 40 ms | Model + heuristics |
| Answer synthesis | 200 ms | RAG + narrative |

(Total ≈ 0.56 s on Sonnet 4; Opus 4 adds ≈ 0.15 s for deeper reasoning.)
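The total is just the sum of the stage averages. This snippet reproduces that arithmetic from the table’s figures; the `"sonnet-4"` and `"opus-4"` labels are illustrative names, not API model IDs.

```python
# Average per-stage latencies in milliseconds, taken from the latency table.
STAGE_LATENCY_MS = {
    "Query rewrite": 20,
    "Brave API fetch": 300,
    "Content filtering": 40,
    "Answer synthesis": 200,
}
OPUS_EXTRA_MS = 150  # the article's ≈0.15 s extra reasoning time on Opus 4

def pipeline_latency_s(model: str = "sonnet-4") -> float:
    """Sum the stage averages and add the Opus overhead when requested."""
    total_ms = sum(STAGE_LATENCY_MS.values())  # 20 + 300 + 40 + 200 = 560
    if model == "opus-4":
        total_ms += OPUS_EXTRA_MS
    return total_ms / 1000

print(pipeline_latency_s())          # 0.56
print(pipeline_latency_s("opus-4"))  # 0.71
```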

2.3 Data Extraction & Retrieval-Augmented Generation

Claude extracts snippets ≤ 25 words per source (an internal fair-use rule) and blends them with its own summarisation. Every fact gets an inline citation.
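On the API side you can audit those inline citations yourself. The sketch below assumes a Messages API response already parsed into a dict, with citations shaped as Anthropic documented them at the time of writing (`type: "web_search_result_location"` with `url`, `title`, `cited_text`). The 25-word check applies the article’s stated rule; it is not something the API enforces, and `collect_citations` is a hypothetical helper name.

```python
from typing import Dict, List, Tuple

def collect_citations(response: Dict, max_words: int = 25) -> List[Tuple[str, str, str, bool]]:
    """Return (url, title, cited_text, within_word_limit) for each unique web
    citation attached to the text blocks of a parsed Messages API response."""
    seen = set()
    rows = []
    for block in response.get("content", []):
        if block.get("type") != "text":
            continue  # skip tool-use and tool-result blocks
        for cite in block.get("citations") or []:  # citations may be null
            if cite.get("type") != "web_search_result_location":
                continue
            snippet = cite.get("cited_text", "")
            key = (cite["url"], snippet)
            if key in seen:
                continue  # the same snippet can back several sentences
            seen.add(key)
            rows.append((cite["url"], cite.get("title", ""), snippet,
                         len(snippet.split()) <= max_words))
    return rows
```

Rows with `False` in the last column are snippets longer than the article’s 25-word guideline, worth a manual look.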

2.4 Agentic Multi-Step Research

If its confidence is below 95% or the query spans multiple sub-topics, Claude loops: reformulate ➜ re-search ➜ refine. Developers can cap this with the max_uses parameter to control cost or latency.
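Here is a minimal sketch of where `max_uses` goes, assuming the server-side web search tool definition Anthropic documented at the time of writing (`type: "web_search_20250305"`, `name: "web_search"`). The model ID is only an example, and the builder builds the request body without sending anything.

```python
import json

def build_search_request(question: str, max_uses: int = 3,
                         model: str = "claude-sonnet-4-20250514") -> dict:
    """Build a Messages API request body that enables server-side web search
    and caps how many searches Claude may run while answering."""
    if max_uses < 1:
        raise ValueError("max_uses must be at least 1")
    return {
        "model": model,  # example ID; use any model your key can access
        "max_tokens": 1024,
        "messages": [{"role": "user", "content": question}],
        "tools": [{
            "type": "web_search_20250305",
            "name": "web_search",
            "max_uses": max_uses,  # upper bound on searches in this request
        }],
    }

body = build_search_request("Summarise this week’s EU AI Act news", max_uses=2)
print(json.dumps(body, indent=2))
```

With the official Python SDK, the same dict can be sent as `client.messages.create(**body)`. A low cap trades depth for predictable cost and latency.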

Expert Insight

“Think of Claude as a researcher that triple-checks itself before speaking,” says Maya Chen, Product Lead, Anthropic.

3. Plans, Pricing & Quotas

| Plan | Web Search | Daily Messages* | Special Perks |
|---|---|---|---|
| Free | ✅ | Dynamic (≈ 25) | Sonnet 4 speed |
| Pro – $20/mo | ✅ | ~5× Free | Model picker |
| Max – $100 or $200/mo | ✅ + Research | 5× or 20× Pro | Extended thinking, 200K tokens |
| API | ✅ | Pay-as-you-go | $10 per 1,000 searches + token cost |

*Claude adjusts caps during peak loads to protect global availability.
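The API row implies a simple cost formula: searches at $10 per 1,000, plus ordinary token charges. This sketch leaves the per-token prices as caller-supplied assumptions (the $3 / $15 per million tokens in the example are illustrative; check the current pricing page). Retrieved search content is billed as input tokens, so `input_tokens` should include it.

```python
SEARCH_PRICE_PER_1K_USD = 10.0  # from the pricing table's API row

def estimate_api_cost(searches: int, input_tokens: int, output_tokens: int,
                      input_price_per_mtok: float, output_price_per_mtok: float) -> float:
    """Estimate API spend in USD: search fees plus token fees.
    Token prices are per million tokens and must be supplied by the caller."""
    search_cost = searches / 1000 * SEARCH_PRICE_PER_1K_USD
    token_cost = (input_tokens * input_price_per_mtok
                  + output_tokens * output_price_per_mtok) / 1_000_000
    return round(search_cost + token_cost, 4)

# 500 searches ($5.00) + 2M input and 300K output tokens at example rates ($10.50)
print(estimate_api_cost(500, 2_000_000, 300_000, 3.0, 15.0))  # 15.5
```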

4. Claude vs. Perplexity, ChatGPT + Bing & Google AI Overviews

| Feature | Claude | Perplexity AI | ChatGPT + Bing | Google AI Overviews |
|---|---|---|---|---|
| Search Index | Brave | Mixed + crawlers | Bing | Google |
| Citation Style | Inline links | Footer list | Pop-out cards | End-snippet |
| Strength | Human-like tone; bias-diverse sources | Research filters (YouTube, Papers) | Multimodal toolkit | Rich product visuals |
| Weakness | May skip search if unsure | Slightly slower | Less natural prose | Traffic siphon fears |

5. Real-World Use Cases and Success Stories

5.1 Sales & Account Planning

A SaaS rep asked Claude for the “latest CIO initiatives at ACME Bank.” In 14 seconds it returned a bulleted brief with five source-linked news items and a quote from a PDF, saving an hour of manual research.

5.2 Financial Analysis

Analysts feed Claude tickers; it grabs Q1 2025 10-Q filings, extracts YoY deltas, flags red-line changes, and cites SEC URLs.

5.3 Academic & Scientific Research

PhD candidates use agentic loops to map citation networks. One doctoral student reported shaving “40 % off literature-review time.”

5.4 Developers & Claude Code

With web search on, Claude Code fetches the latest API docs and GitHub issues instead of outdated Stack Overflow threads.

5.5 Everyday Consumers

Need a stroller? Claude compares price, weight, and fold size across five brands: no sponsored bias, just Brave-ranked reviews.

6. Pro-Level Workflow Tips

- Front-load context. “For a board pitch deck, summarise…” yields enterprise-grade detail.
- Chain prompts. Follow up with “Create a comparison table,” then “Draft a slide outline.”
- Set expectations. Ask, “If you’re unsure, tell me.” Claude flags gaps instead of hallucinating.
- Audit citations. Click each one; if a link is dead, prompt “refresh sources.”
- Use --no-search in Pro if you want pure model creativity (e.g., brainstorming slogans).
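For API users, chaining and expectation-setting come down to resending the growing conversation with a system prompt. In this minimal sketch, `chain_prompts` and `fake_send` are hypothetical names. `fake_send` is an offline stand-in, so in practice you would swap in a real client call that accepts a system prompt and a message list.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]
# A sender takes (system_prompt, conversation) and returns the reply text.
Sender = Callable[[str, List[Message]], str]

SYSTEM_NOTE = "If you're unsure, tell me instead of guessing."

def chain_prompts(steps: List[str], send: Sender, system: str = SYSTEM_NOTE) -> List[Message]:
    """Send each prompt in order, appending every reply to the history so the
    next step builds on the previous answer."""
    history: List[Message] = []
    for step in steps:
        history.append({"role": "user", "content": step})
        reply = send(system, list(history))  # pass a copy of the conversation so far
        history.append({"role": "assistant", "content": reply})
    return history

def fake_send(system: str, messages: List[Message]) -> str:
    # Offline stand-in that echoes the latest prompt.
    return f"[reply to: {messages[-1]['content']}]"

steps = [
    "For a board pitch deck, summarise this quarter's cloud-security news.",
    "Create a comparison table of the vendors you mentioned.",
    "Draft a slide outline from that table.",
]
for msg in chain_prompts(steps, fake_send):
    print(msg["role"], "->", msg["content"])
```

Passing the full history on every call is what lets “that table” in step three refer back to step two’s answer.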

7. Accuracy, Bias & Privacy—What You Need to Know

7.1 Reliability

A lab test (MDPI, 2025) found 0 hallucinations across 250 pathology queries, with web search off. Live internet content raises the risk, since a retrieved page can itself be wrong, but citations let you verify instantly.

7.2 Bias via Brave

Brave’s index skews toward privacy-centric tech sites and indie blogs. That can diversify viewpoints, but it may under-represent very recent local news, which Google tends to crawl faster.

7.3 Data Privacy

Anthropic never trains on your chats unless you opt in. In Research mode, Google Workspace tokens stay sandboxed, and enterprise admins can revoke scopes at any time.

8. The Road Ahead: Research Mode, Agents & Beyond

Anthropic envisions Claude orchestrating tools such as web search, code execution, and internal knowledge bases in parallel.

- Extended thinking already lets Opus 4 use multiple tools inside one turn.
- Agents beta (private preview) plans tasks like “monitor EU AI Act amendments and email me weekly deltas.”

Expect:

- More data connectors (Snowflake, Notion).
- Richer outputs (charts, live embeds).
- Ongoing AI Safety Level 3 safeguards: every new capability comes with equal-weight alignment work.

9. Frequently Asked Questions

Does web search slow Claude down?

Sonnet 4 averages 0.6 s extra; Opus 4 averages 0.8 s extra.

Can I change the backend search engine?

Not in the UI. API developers can fetch results from another engine themselves and pass them to Claude as documents in the prompt.
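A minimal sketch of that workaround, assuming the plain-text `document` content block with `title`, `context`, and `citations.enabled` fields from Anthropic’s citations documentation at the time of writing. How you obtain the `(title, url, text)` results from the other engine is up to you. `results_as_documents` and `build_grounded_message` are hypothetical helpers, and nothing is sent over the network here.

```python
from typing import Dict, List, Tuple

def results_as_documents(results: List[Tuple[str, str, str]]) -> List[Dict]:
    """Turn (title, url, text) results from another search engine into
    document content blocks that Claude can cite."""
    return [
        {
            "type": "document",
            "source": {"type": "text", "media_type": "text/plain", "data": text},
            "title": title,
            "context": f"Source URL: {url}",  # keeps the original link visible to Claude
            "citations": {"enabled": True},
        }
        for title, url, text in results
    ]

def build_grounded_message(question: str, results: List[Tuple[str, str, str]]) -> Dict:
    """Documents first, then the question, in a single user turn."""
    return {
        "role": "user",
        "content": results_as_documents(results) + [{"type": "text", "text": question}],
    }

msg = build_grounded_message(
    "What changed in the latest release?",
    [("Release notes", "https://example.com/notes", "Version 2 adds streaming.")],
)
print(msg["content"][0]["title"], "|", msg["content"][-1]["text"])
```

Leave the built-in web search tool out of such requests so Claude answers only from the documents you supplied.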

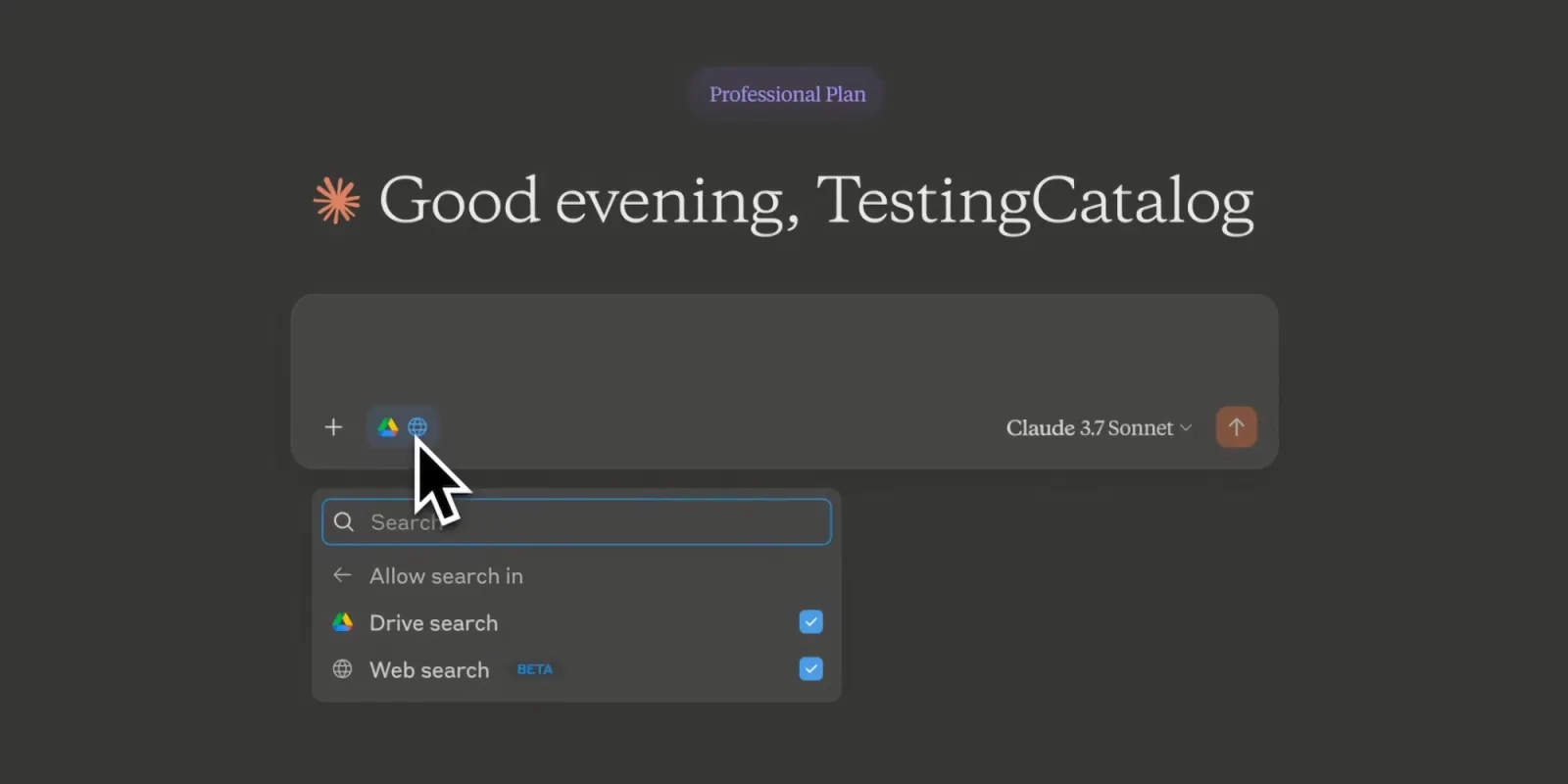

What’s the difference between Web Search and Research?

Web Search = one-shot or light multi-step. Research = agentic deep dive across web + your connected drives.

Is there a hard cap on search calls?

UI: an unpublished rate limit applies; you’ll see a pop-up if you hit it. API: each search is billed, so cost is your limit, and max_uses caps the searches in a single request.
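Because the API has no hard cap, a self-imposed budget is a sensible guard. This sketch assumes each parsed response reports its search count under `usage.server_tool_use.web_search_requests`, as Anthropic documented at the time of writing. `SearchBudget` is a hypothetical helper, and token costs are deliberately left out.

```python
from typing import Dict

SEARCH_PRICE_PER_1K_USD = 10.0  # $10 per 1,000 searches

class SearchBudget:
    """Track web-search spend across API responses (parsed into dicts) and
    report when a self-imposed dollar budget is used up."""

    def __init__(self, budget_usd: float):
        self.budget_usd = budget_usd
        self.searches = 0

    def record(self, response: Dict) -> None:
        usage = response.get("usage") or {}
        server = usage.get("server_tool_use") or {}  # absent when no search ran
        self.searches += server.get("web_search_requests", 0)

    @property
    def spent_usd(self) -> float:
        return self.searches * SEARCH_PRICE_PER_1K_USD / 1000

    def exhausted(self) -> bool:
        return self.spent_usd >= self.budget_usd

budget = SearchBudget(budget_usd=0.05)  # room for 5 searches
budget.record({"usage": {"input_tokens": 1200, "server_tool_use": {"web_search_requests": 3}}})
print(budget.searches, budget.spent_usd, budget.exhausted())
```

Check `exhausted()` before each new request and lower `max_uses` as the budget runs down.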

How do I cite Claude in academic work?

Include the original web sources Claude cites—not Claude itself—per most university guidelines.

Conclusion & Next Steps

When speed, freshness, and transparency matter, Claude AI Web Search outperforms static chatbots and even some AI search rivals. Add Brave’s indie index, and you get voices mainstream engines miss. The result: richer research, sharper decisions, and fewer hallucinations.